MLSys 2020 Recap

Posted on Wed 04 March 2020 in research

This week, I attended the third Conference on Machine Learning and Systems (MLSys) in Austin, TX. It was a great experience and I thought I would record some of my thoughts and observations from attending the conference.

Demonstration of Ballet

First off, I was attending in order to present a demonstration of my research project Ballet, a framework for open-source collaborative feature engineering. I was really excited to share this project with the MLSys community, try out a live demo, and get some feedback. As some brief background, this project started from an observation about two kinds of work. Open-source software development is highly collaborative and supported by mature workflows and tooling. Data science projects, by contrast, are usually developed by individuals or small co-located teams.[1] What would it take to scale the number of committers to a single data science project to the order of one hundred or one thousand?

The goal is thus to make data science development more like open-source software development: collaborative, modular, tested, and scalable. In Ballet, we identify feature engineering as one process that can be decomposed into modular pieces: the definitions of individual features. Each feature can be written in its own Python source file in 10 or so lines of code. Our simple API works by declaring inputs from a fixed data frame and specifying a scikit-learn style transformer or sequence of transformers. The underlying Ballet framework is then responsible for thoroughly validating submitted features and for collecting individual features from the file system into an executable feature engineering pipeline. Potential contributors to a Ballet project can hack on the repository directly using their preferred tooling. We also support a workflow that meets data scientists "where they are" in terms of development environment: repositories can be launched using Binder into an interactive JupyterLab environment. Data scientists can develop features within the notebook and then submit them to the underlying project using our Lab extension, which takes care of formatting the selected source code and submitting it as a pull request under the hood.
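The "inputs plus transformer" idea can be made concrete with a small, self-contained sketch in plain Python. It is only an illustration. `Feature`, `FillMissingWithMean`, `Log1p`, `SubtractFrom`, and `build_feature_matrix` are hypothetical stand-ins, not Ballet's actual API. The real framework works with data frames and scikit-learn transformers rather than the dict-of-lists "frame" assumed here. The column names and values are also made up and are not taken from the Ames dataset.

```python
import math
from dataclasses import dataclass
from typing import Dict, List, Optional, Sequence

# Toy "data frame" (an assumption for this sketch): column name -> values,
# with None marking a missing value.
Frame = Dict[str, List[Optional[float]]]


class FillMissingWithMean:
    """scikit-learn style transformer: learns the column mean in fit()."""

    def fit(self, values):
        present = [v for v in values if v is not None]
        self.mean_ = sum(present) / len(present)
        return self

    def transform(self, values):
        return [self.mean_ if v is None else v for v in values]


class Log1p:
    """Stateless transformer: log(1 + x)."""

    def fit(self, values):
        return self

    def transform(self, values):
        return [math.log1p(v) for v in values]


class SubtractFrom:
    """Stateless transformer: reference - x (e.g. an age computed from a year)."""

    def __init__(self, reference: float):
        self.reference = reference

    def fit(self, values):
        return self

    def transform(self, values):
        return [self.reference - v for v in values]


@dataclass
class Feature:
    """Hypothetical feature definition: one input column plus transformer steps."""
    input: str
    transformer: Sequence[object]
    name: str

    def fit_transform(self, df: Frame) -> List[float]:
        values = df[self.input]
        for step in self.transformer:  # fit each step on the previous step's output
            values = step.fit(values).transform(values)
        return values

    def transform(self, df: Frame) -> List[float]:
        values = df[self.input]
        for step in self.transformer:  # reuse the state learned during fitting
            values = step.transform(values)
        return values


def build_feature_matrix(features: Sequence[Feature], df: Frame,
                         fit: bool = True) -> Dict[str, List[float]]:
    """Collect independently written features into one table of feature values."""
    return {f.name: f.fit_transform(df) if fit else f.transform(df) for f in features}


# Made-up rows; column names are illustrative, not the real Ames schema.
train = {"lot_area": [8450.0, None, 11250.0], "year_built": [2003.0, 1976.0, 2001.0]}
features = [
    Feature(input="lot_area", transformer=[FillMissingWithMean(), Log1p()], name="log_lot_area"),
    Feature(input="year_built", transformer=[SubtractFrom(2010.0)], name="age_in_2010"),
]
X_train = build_feature_matrix(features, train)
X_new = build_feature_matrix(features, {"lot_area": [None], "year_built": [2010.0]}, fit=False)
print(X_train["age_in_2010"])  # → [7.0, 34.0, 9.0]
```

The design choice worth noticing is the split between `fit` and `transform`, which is the same contract scikit-learn transformers follow. A statistic such as the fill-in mean is learned once on training data and then reused unchanged on new rows. Each feature can therefore be written and tested on its own and still slot into a shared pipeline.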

In the demo, I invited those who came to my booth to join a live feature engineering collaboration. The task was to predict house prices for a dataset of homes in Ames, Iowa, given characteristics of each house. You can see the exact demo here.

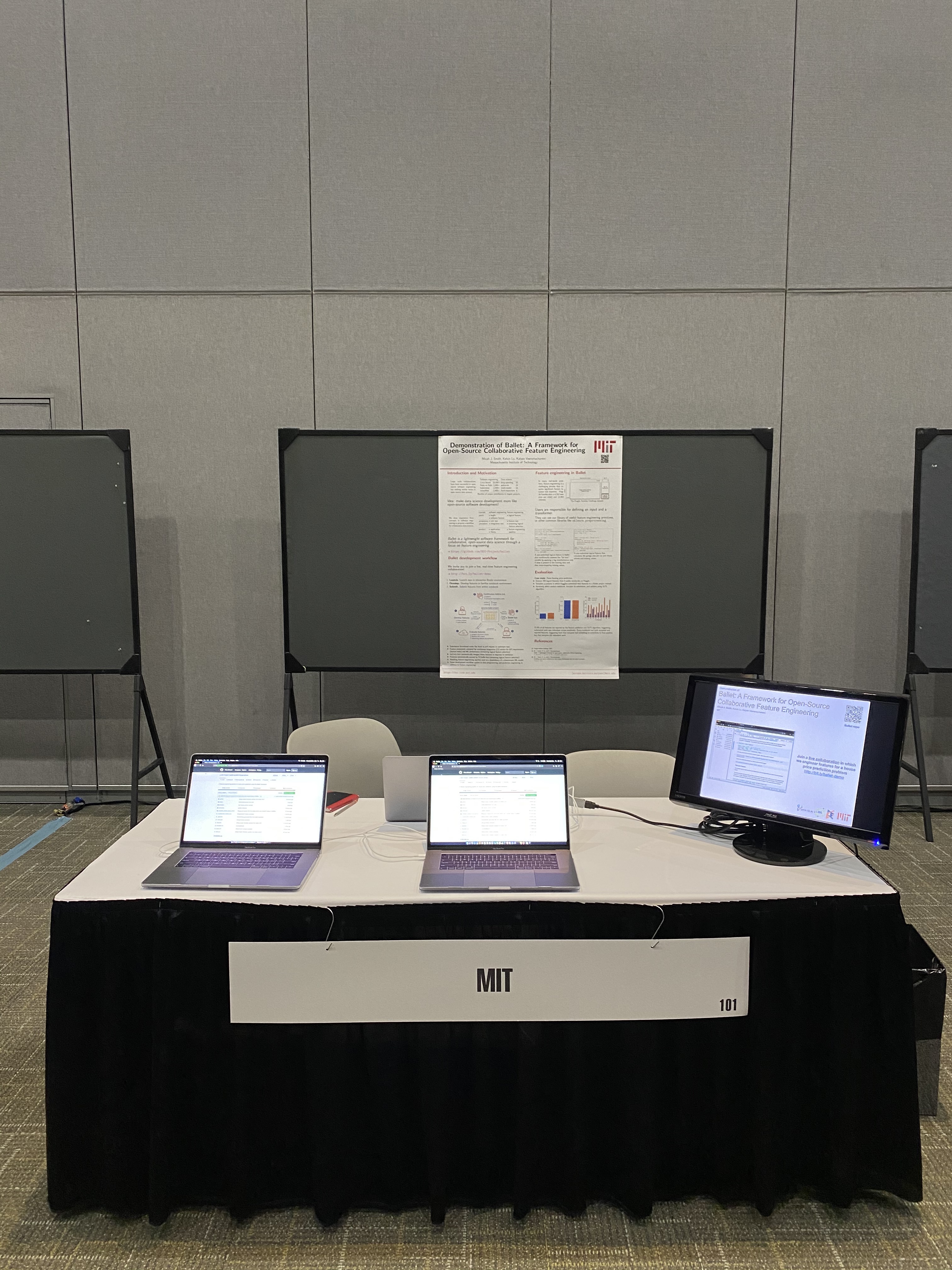

In the photo of my booth above, you can see the two laptops that I lugged from Cambridge. You can also see the 20" monitor that cost an eye-popping $400 to rent from the conference-approved AV vendor. Other demonstrators had received quotes of $1,200 to $2,000 to rent TVs for the three-hour demonstration session. One group creatively bought a brand-new TV from a nearby Target and was planning on returning it the next day. Even if you bought a new TV and threw it out on the street afterwards, it would cost less than renting one from the conference vendor...

Luckily, my booth was fairly well-attended. Whether this is related to the fact that my booth was right next to the bar and a table of appetizers including fried chicken sliders and mini-enchiladas is left as an exercise to the reader. I had some great conversations with a bunch of folks, and some people tried to contribute to the ballet-predict-house-prices project live in the launched Binder repo. Many people were interested in my vision of open-source and collaborative data science. But I also found that many people had stopped by my booth because they found feature engineering to be a major challenge within their organization or within projects they had worked on.

You can read more in the short paper on the demonstration here. I am always happy to chat about this project with anyone who is interested, either in person or via email.

Everything else

I really enjoyed the conference, seeing a lot of great research and meeting a bunch of new folks in the systems and ML space. Here are a couple of projects that stood out to me:

- MotherNets: Rapid Deep Ensemble Learning, by Abdul Wasay, Brian Hentschel, Yuze Liao, Sanyuan Chen, Stratos Idreos (Harvard). This paper focuses on training ensembles of deep neural nets. Ensembles usually improve accuracy. In deep learning, however, training costs are already high, and the training time for an ensemble usually grows linearly with the number of models, which can be prohibitive. Previous research proposed ways to speed up this process. One example is snapshot ensembles, in which a snapshot of the model is taken every $n$ epochs and the snapshots are ensembled together once training is complete. A snapshot ensemble costs the same to train as a single model, and interestingly, it still performs better than a single model. However, all of its members share a single model architecture. The idea behind MotherNets is to allow many model architectures while still speeding up training. First, the "MotherNet" is extracted from the separate architectures. It is defined as their "greatest common architecture", such that each separate architecture is a strict expansion of it. The MotherNet is then trained to convergence, at which point it is used to "hatch" models with the separate architectures. This is done by a function-preserving transformation, an interesting process in which layers are added or expanded. The weights are adjusted so that the new, expanded model computes exactly the same function as the original model. The hatched models are then also trained to convergence. As a result, the desired set of architectures can be collectively trained to convergence faster than if each were trained separately. The authors show that MotherNets lets a practitioner choose from a Pareto frontier of approaches trading off accuracy and training speed.

- What is the State of Neural Network Pruning?, by Davis Blalock, Jose Javier Gonzalez Ortiz, Jonathan Frankle, John Guttag (MIT). The authors, fellow grad students at MIT, set out to determine the best method for neural network pruning. Instead, they ended up conducting an extremely thorough survey and meta-analysis of 81 papers on pruning. They found that while pruning works, it may be better to just use a more efficient model architecture in the first place (e.g., use EfficientNet rather than a pruned version of VGGNet). Moreover, because evaluation methodology differs and is inconsistent across papers, it was impossible to determine the "best" pruning method. For example, of the surveyed papers that claimed to be new SOTA methods, 40% were never compared against by any later work.

- Riptide: Fast End-to-end Binarized Neural Networks, by Joshua Fromm, Meghan Cowan, Matthai Philipose, Luis Ceze, Shwetak Patel (UW, OctoML, MSR). Traditionally, weights in a neural network have been represented as 32-bit floating-point numbers. To make conventional neural networks faster and more compact, one can instead quantize these weights, even to the point of representing each one as a single bit. Previous authors had reported theoretical speedups from this technique. However, no one had actually made a binarized network run faster in practice, due to challenges such as missing library support for operations on binarized weights and a lack of targeted optimizations. The authors introduce a set of techniques that each contribute to the performance of binarized networks, such as bitpack fusion, fused glue operators, tiling, and vectorization. Altogether, they achieve speedups of 4-12x over full-precision implementations of SOTA models at comparable accuracy.

This is just a selection of the great papers that were presented, and I encourage you to look through the full proceedings (which are quite manageable in length, unlike other ML conferences cough cough) if you are interested.

The conference was single-track and fairly small, with about 450 registrations. Fewer people than that actually attended, since the coronavirus scare is ongoing and many people's organizations have imposed travel restrictions. As a result, we all applied hand sanitizer regularly, touched elbows instead of shaking hands, and overall got to talk about machine learning and systems in a more intimate setting. There was a significant industry presence, and a lot of the most interesting work is being done in industry, to be sure. I hope to be back next year, when the conference will be held in the Bay Area.

1: By data science project, I mean an end-to-end pipeline that can actually make predictions for new data instances. An ML library like TensorFlow is not a data science project in this sense.